In this article, I will cover link building, specifically how to select and evaluate sites for placing backlinks. I sometimes write guides for my team, and I want to share one of them.

This guide explains how to select sites for buying links in the “Blogger Outreach” or “Guest Posting” format, where you publish an article containing 1-2 in-content links to your target sites.


Content requirements

Content should be as readable as possible: not padded for word count and not generated by ChatGPT. Check every text with an AI content detector. This year, many copywriters who previously worked for low fees started generating content with AI services, and it’s better to stop working with such people at once.

- Word count: at least 500 words, which is roughly 2,000-2,500 characters

- Location: published in the main feed and linked from the home page or a category page

- Language: the same as your target site

- Structure: an article with headings, lists, and images

- Backlink placement:

  - Your link should be the first link in the article

  - It should sit within the first 3 paragraphs, on the first screen

  - The link must not have rel="nofollow"

- Other links:

  - Internal links within the site are allowed

  - Links to third-party domains may appear only after your links (no more than 1-2, and only to Wiki-style sites)
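Most of these requirements can be checked automatically once the article is live. Below is a minimal Python sketch, assuming paragraphs are marked up as `<p>` tags and that "first link" means the first `<a href>` in the article HTML you pass in. The function name and problem codes are hypothetical, and the "first screen" rule depends on the page layout, so it still needs a visual check.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


def host_matches(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    return host == domain.lower() or host.endswith("." + domain.lower())


class ArticleParser(HTMLParser):
    """Collects text and every <a href> with its rel values and paragraph index."""

    def __init__(self):
        super().__init__()
        self.paragraph = -1  # index of the most recent <p> (-1 = before the first)
        self.text = []
        self.links = []      # (host, rel_values, paragraph_index) in document order

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "p":
            self.paragraph += 1
        elif tag == "a" and attrs.get("href"):
            host = urlparse(attrs["href"]).hostname or ""  # "" for relative links
            rel = (attrs.get("rel") or "").lower().split()
            self.links.append((host, rel, self.paragraph))

    def handle_data(self, data):
        self.text.append(data)


def check_article(html, target_domain, site_domain="", min_words=500,
                  max_paragraph=3, max_third_party=2):
    """Return a list of problem codes; an empty list means the article passes."""
    parser = ArticleParser()
    parser.feed(html)
    parser.close()
    problems = []

    if len(" ".join(parser.text).split()) < min_words:
        problems.append("too_few_words")

    ours = [i for i, (host, _, _) in enumerate(parser.links)
            if host and host_matches(host, target_domain)]
    if not ours:
        return problems + ["no_target_link"]

    if ours[0] != 0:
        problems.append("target_link_not_first")
    if parser.links[ours[0]][2] >= max_paragraph:
        problems.append("target_link_too_low")
    if any("nofollow" in parser.links[i][1] for i in ours):
        problems.append("target_link_nofollow")

    # Third-party: absolute links that are neither ours nor internal to the donor site.
    third_party = [i for i, (host, _, _) in enumerate(parser.links)
                   if host and i not in ours
                   and not (site_domain and host_matches(host, site_domain))]
    if any(i < ours[-1] for i in third_party):
        problems.append("third_party_before_target")
    if len(third_party) > max_third_party:
        problems.append("too_many_third_party")
    return problems
```

For example, `check_article(html, "target.com", site_domain="donor.com")` returns an empty list for a compliant article and codes such as `"target_link_nofollow"` otherwise.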

Filtering sites on link exchanges

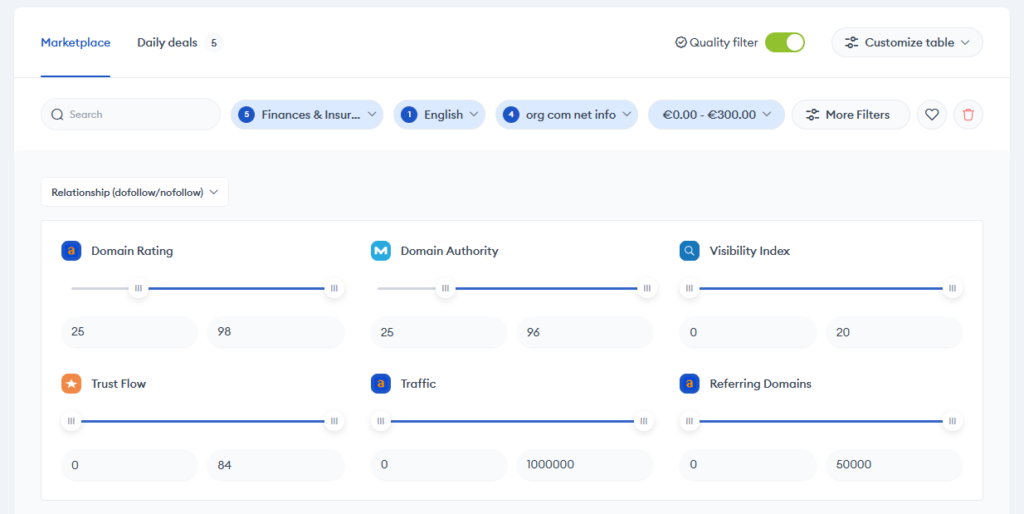

First, we shortlist domains on the link exchange with the basic filters below, then check them further in the Netpeak Checker desktop application:

- DR or DA: more than 25

- Site language: your target site language

- Domain: only country-specific domains (ccTLDs) or .com, .net, .info, .org (gTLDs)

- Categories: General, News, or Target category (don’t restrict too much at this stage)

- Price: up to $300; anything above that is overpriced

Site filter
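If you export an exchange catalog to a spreadsheet, the basic filters above are easy to reproduce in a script. Here is a minimal Python sketch; the `Listing` fields are hypothetical, and I read "country-specific" as the ccTLD of your target country, which is an assumption. Categories are left out on purpose, since this stage should not restrict them much.

```python
from dataclasses import dataclass

# Generic TLDs allowed by the filter above.
GENERIC_TLDS = {"com", "net", "info", "org"}


@dataclass
class Listing:
    """One site offered on a link exchange (hypothetical field names)."""
    domain: str
    dr: int          # Ahrefs Domain Rating
    da: int          # Moz Domain Authority
    language: str    # e.g. "de"
    price_usd: float


def passes_basic_filter(site, target_language, target_cctld,
                        min_authority=25, max_price=300):
    """Apply the DR/DA, language, domain zone, and price filters."""
    tld = site.domain.lower().rsplit(".", 1)[-1]
    return (
        (site.dr > min_authority or site.da > min_authority)
        and site.language == target_language
        and (tld in GENERIC_TLDS or tld == target_cctld)
        and site.price_usd <= max_price
    )


listings = [
    Listing("example.de", 40, 30, "de", 150),
    Listing("cheap.xyz", 50, 50, "de", 100),
    Listing("pricey.com", 60, 55, "de", 450),
    Listing("weak.org", 20, 18, "de", 50),
    Listing("news.com", 30, 10, "de", 300),
]
shortlist = [s.domain for s in listings if passes_basic_filter(s, "de", "de")]
print(shortlist)  # ['example.de', 'news.com']
```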

Checking via Netpeak Checker

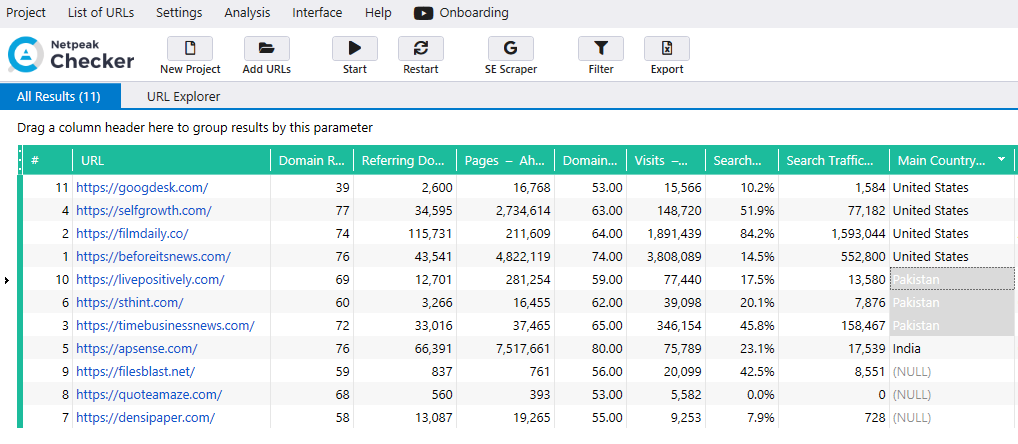

Netpeak Checker is an excellent desktop app: you connect APIs from many SEO services to it and get an overall picture, with all the data in one table, within seconds. In it, we evaluate domains against the parameters below using plug-in APIs from Ahrefs, Semrush, Similarweb, Netpeak, and MOZ; these sources can substitute for or duplicate one another.

- Domain Rating: over 25 (Ahrefs/Semrush)

- Referring Domains: over 500 (Ahrefs/Semrush)

- Ahrefs Pages: over 1k (Ahrefs)

- All visits: over 10k (Similarweb/Netpeak)

- Search Traffic Share: more than 20% (Similarweb/Netpeak)

- Organic Traffic: over 1k (Similarweb/Netpeak/Ahrefs)

- Main Country: your target country (Similarweb/Netpeak)

- Main Topic Category: your target category (or a related one), General, or News (Similarweb/Netpeak)

You can additionally check:

- Domain Authority: more than 25 (MOZ)

- DR and DA: how well they correlate and how far apart they are (MOZ/Ahrefs)

- HTML Lang: your target language (Netpeak)
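To apply these thresholds to a large batch, you can run the exported table through a short script. This is a minimal Python sketch, assuming you export the Netpeak Checker results to CSV; the column names are hypothetical, so rename them to match your own export. Optional checks such as Domain Authority can be added as extra entries in `THRESHOLDS`.

```python
import csv
import io

# Minimum values from the list above (a row must be strictly above each).
# Hypothetical column names: rename them to match your own export.
THRESHOLDS = {
    "domain_rating": 25,
    "referring_domains": 500,
    "ahrefs_pages": 1_000,
    "all_visits": 10_000,
    "search_traffic_share": 20,  # percent of all visits
    "organic_traffic": 1_000,
}


def passes_checks(row, target_country, allowed_categories):
    """True if every metric beats its threshold and GEO and topic match."""
    for column, minimum in THRESHOLDS.items():
        if float(row[column]) <= minimum:
            return False
    return (row["main_country"] == target_country
            and row["main_category"] in allowed_categories)


def shortlist(csv_text, target_country, allowed_categories):
    """Return the domains from a CSV export that pass all checks."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["domain"] for row in reader
            if passes_checks(row, target_country, allowed_categories)]
```

Usage: `shortlist(open("export.csv", encoding="utf-8").read(), "DE", {"Travel", "General", "News"})`, where the file name is only an example.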

Signs of SPAM sites

These are conclusions from my personal experience, and they may shift from case to case. Still, you can use them as a starting point and work out your own parameters for backlink evaluation.

- Imbalance between DA and DR: indicates that one of them is being inflated artificially

- Spam GEOs: traffic from India, Pakistan and Bangladesh

- A small share of organic traffic: suggests that the traffic is artificial and Google ranks the site poorly

- Few referring domains but a high DR, or vice versa

- No organic traffic: the site may be banned by Google

- Few Ahrefs Pages: likely a small, new site built to sell links

- Undefined or untargeted topic: indicates traffic boosting

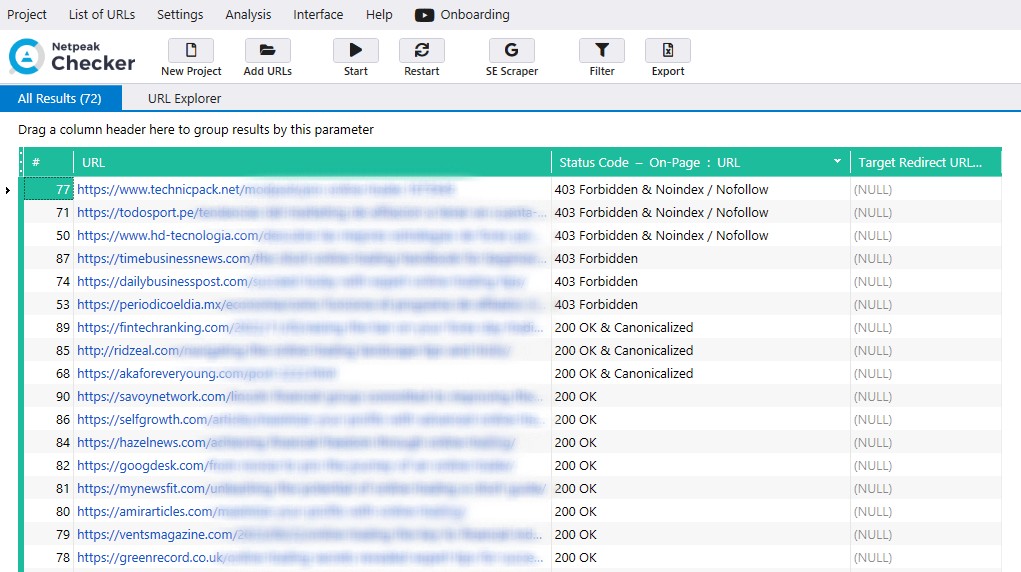
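The spam signs above can be turned into flags that you review by hand. Below is a minimal Python sketch; the 20% search share and 1k pages reuse the Netpeak thresholds from earlier, while the DA/DR gap and the DR vs referring-domains cut-offs are my own assumptions, so tune them on your data. The "untargeted topic" sign still needs a human look, so only an empty topic is flagged.

```python
from dataclasses import dataclass

SPAM_GEOS = {"IN", "PK", "BD"}  # India, Pakistan, Bangladesh


@dataclass
class SiteMetrics:
    dr: int                 # Ahrefs Domain Rating
    da: int                 # Moz Domain Authority
    referring_domains: int
    ahrefs_pages: int
    organic_traffic: int
    search_share: float     # percent of visits from search
    main_country: str       # ISO 3166-1 alpha-2 code
    topic: str              # main topic category, "" if undefined


def spam_flags(m, max_gap=20, min_search_share=20, min_pages=1_000):
    """Return the spam signs a site shows; thresholds are assumptions."""
    flags = []
    if abs(m.dr - m.da) > max_gap:
        flags.append("da_dr_imbalance")
    if m.main_country in SPAM_GEOS:
        flags.append("spam_geo")
    if m.search_share < min_search_share:
        flags.append("low_organic_share")
    # High DR with few referring domains, or the reverse (assumed cut-offs).
    if (m.dr >= 50 and m.referring_domains < 500) or (m.dr < 20 and m.referring_domains > 5_000):
        flags.append("dr_refdomains_mismatch")
    if m.organic_traffic == 0:
        flags.append("no_organic_traffic")
    if m.ahrefs_pages < min_pages:
        flags.append("few_pages")
    if not m.topic.strip():
        flags.append("undefined_topic")
    return flags
```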

Checking whether the page can be indexed

Some webmasters try to cheat even after receiving a unique article and payment for the placement. They do it out of fear of being penalized by Google for selling links, or of losing organic traffic because sold links pass link juice to other sites.

Popular methods of blocking indexing

- Server response: 200 for users and 403 for search engines

- Robots.txt: the category containing the article is disallowed in robots.txt

- Meta tag: meta name="robots" content="noindex" (hidden from search engines)

- Rel canonical: link rel="canonical" href="https://example.com/" (canonical pointing to the home page)
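All four tricks can be detected from data you can download yourself. Here is a minimal Python sketch using only the standard library; the function names and problem codes are hypothetical. It compares the status for a browser User-Agent with the status for a Googlebot User-Agent, but a site that cloaks by crawler IP address rather than User-Agent will not be caught this way.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def fetch_status(url, user_agent):
    """HTTP status for a GET with the given User-Agent (redirects are followed)."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=15) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


class IndexingTagParser(HTMLParser):
    """Finds <meta name="robots"> content and <link rel="canonical"> href."""

    def __init__(self):
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "robots":
            self.robots = attrs.get("content", "").lower()
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href")


def indexing_problems(page_url, html, robots_txt, status_user, status_bot):
    """Return codes for each blocking method found on the page."""
    problems = []
    if status_user == 200 and status_bot != 200:
        problems.append("cloaked_status")
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch("Googlebot", page_url):
        problems.append("robots_disallow")
    tags = IndexingTagParser()
    tags.feed(html)
    tags.close()
    if "noindex" in tags.robots:
        problems.append("meta_noindex")
    # A canonical that resolves to a different URL hands indexing to that page.
    if tags.canonical and urljoin(page_url, tags.canonical).rstrip("/") != page_url.rstrip("/"):
        problems.append("canonical_elsewhere")
    return problems
```

In practice you would pass `fetch_status(url, BROWSER_UA)` and `fetch_status(url, GOOGLEBOT_UA)` as the two status arguments, together with the downloaded page HTML and the site's robots.txt.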

Detecting webmaster cheating

- Open Netpeak Checker and add a list of URLs

- Choose Onpage -> Status Code

- Choose Onpage -> Target Redirect URL

- Double-check URLs that return a 301 status code and see where they redirect

- Start the analysis and reject every placement that does not return a 200 response

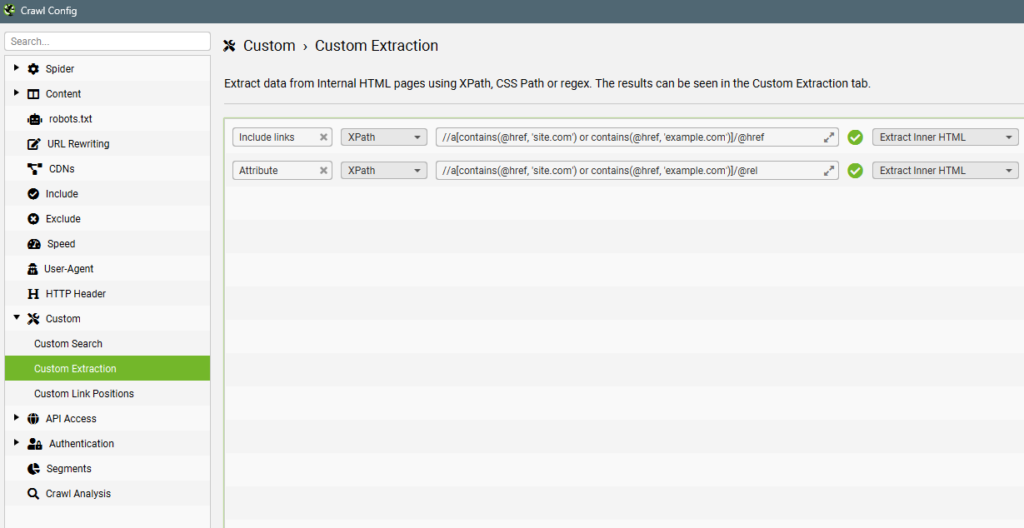
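If you want the same Status Code and Target Redirect URL check outside Netpeak Checker, a small script will do. This is a minimal Python sketch with hypothetical function names: it disables redirect following so that a 301 stays visible together with its `Location` header, then accepts only 200 and flags redirects for the manual double-check.

```python
import urllib.error
import urllib.request


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so 3xx codes stay visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError with the 3xx code


OPENER = urllib.request.build_opener(NoRedirect)


def check_url(url, timeout=15):
    """Return (status_code, redirect_location_or_None) without following redirects."""
    try:
        with OPENER.open(url, timeout=timeout) as response:
            return response.status, None
    except urllib.error.HTTPError as error:
        return error.code, error.headers.get("Location")


def verdict(status, location=None):
    """Accept only 200; flag redirects for a manual look; decline the rest."""
    if status == 200:
        return "accept"
    if 300 <= status < 400:
        return f"review redirect -> {location}"
    return "decline"
```

Usage: `verdict(*check_url("https://donor.com/my-post"))`, where the URL is a placeholder.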

Checking for link availability

To check links in bulk, we use a crawler, the primary tool of many SEO specialists: Screaming Frog SEO Spider. Some webmasters forget to add the link, add it with an error, or try to hide it with JavaScript (users see it, but bots do not).

- Launch: Screaming Frog SEO Spider

- Mode: LIST

- Go to: Configuration -> Custom -> Custom Extraction

- Add the following:

  - XPath //a[contains(@href, 'site.com') or contains(@href, 'example.com')]/@href

  - XPath //a[contains(@href, 'site.com') or contains(@href, 'example.com')]/@rel

- Go to: the “Custom Extraction” tab to review the results
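For a quick check without Screaming Frog, the two XPath expressions can be mimicked in plain Python. This is a minimal sketch with hypothetical names; like the XPath, it matches any `href` that contains one of the domain strings and reports its `rel` value. It parses the raw HTML without running JavaScript, so a link that only a script inserts will show up as missing.

```python
import urllib.request
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Mimics //a[contains(@href, d1) or contains(@href, d2)]/@href and /@rel."""

    def __init__(self, domains):
        super().__init__()
        self.domains = domains
        self.found = []  # (href, rel) pairs in document order

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href") or ""
        if any(domain in href for domain in self.domains):
            self.found.append((href, attrs.get("rel") or ""))


def extract_links(html, domains):
    """Return (href, rel) for every link whose href contains one of the domains."""
    parser = LinkExtractor(domains)
    parser.feed(html)
    parser.close()
    return parser.found


def fetch_html(url, timeout=15):
    """Download the raw HTML of a page (no JavaScript is executed)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

An empty result for a placement URL means the link is missing from the HTML a crawler receives, and a `"nofollow"` in the rel value breaks the placement requirements.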

Speed up indexing

Next, we speed up Google indexing of the URLs with Link_Indexing_bot in Telegram. It is a third-party solution, but it works, and I don’t know of anything better yet.

- Collect all URLs in one TXT file

- Request indexing through the Telegram bot

- Upload your file with links

- Submit them for indexing
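Collecting the URLs into one TXT file is the only step here that is easy to script. This minimal Python sketch removes blanks and duplicates and writes one URL per line; I am assuming that plain one-per-line format is what the bot accepts, so check its own instructions. The function names are hypothetical, and the upload itself still happens in Telegram.

```python
from pathlib import Path


def build_url_list(urls):
    """Strip whitespace, drop blanks and duplicates (first occurrence wins), one URL per line."""
    unique = dict.fromkeys(u.strip() for u in urls if u.strip())
    return "".join(url + "\n" for url in unique)


def write_url_file(urls, path="links.txt"):
    """Write the cleaned list to a UTF-8 text file and return how many URLs it holds."""
    text = build_url_list(urls)
    Path(path).write_text(text, encoding="utf-8")
    return text.count("\n")
```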

As a result of these steps, you can find quality sites for your backlinks, save your budget, and get backlinks that work. Because these links do not look artificial, come from high-quality sites, and match your GEO and topic, they are far less likely to be hit by Google filters.
